Mastering Data: Where do processes fit in?

“A failure to address service-oriented data redesign at the same time as process redesign is a recipe for disaster.” Michael Blechar

On recent trips to client sites over the summer, the same problem has arisen regarding enterprise Business Process Management (BPM) programs. I have repeatedly been asked: “How do I achieve one clear version of the truth across multiple applications? How can I avoid complex data constructs in the BPM solution, and do I need to build an enterprise data model to support the enterprise BPM program?” It took me a while to piece the answers together, but a chance conversation with some colleagues about Master Data Management (MDM) yielded some pretty interesting results. But before I launch into possible solutions, let's take a step back and ask, “How did we get here?”

With their adoption of open standards, SOA, SOAP and web services, BPM solutions are excellent vehicles for creating composite applications. Organisations have naturally grasped the opportunity to hide the complexity of application silos and eliminate error-prone, time-consuming re-keying of data. However, they have embarked on these enterprise programs in a piecemeal fashion, one project at a time, often with no clear vision of their target operating model and often ignoring what Michael Blechar calls siloed data.

Creating a single unified interface that contains all the data needed to complete a process can greatly contribute to a seamless and streamlined customer experience, meeting both employee and customer expectations. I have seen many examples of these apps in recent years.

However, customers who had embarked on enterprise BPM programs were finding that, far from having simplified and streamlined processes, they were increasingly coming face to face with the problem of data. Data from multiple sources, in different systems, in differing formats and in sheer volume, was not so much the problem as duplication was. While they are great at integrating and presenting data, with few exceptions BPM solutions are much less suited to managing data. How so?

In today's heterogeneous application landscapes, organisations have multiple systems that hold customer, product, partner, payment history and a host of other critical data. First-wave BPM implementations typically integrate to one or two systems, and the problem of master data management is hidden. However, as the second and third waves of process implementations begin, and the need grows to integrate with more systems carrying the same entities, the problem of synchronising updates to customer addresses or product data becomes acute.

While BPM tools have the infrastructure to hold a data model and integrate to multiple core systems, the process of mastering the data can become complex and, as the program expands across ever more systems, the challenges can become unmanageable. In my view, BPMS solutions, with a few exceptions, are not the right place to be managing core data[i]. At the enterprise level, MDM solutions are far more elegant solutions designed specifically for this purpose.

So back to those customers I visited in midsummer. I was asked, “How do I manage data across upwards of 30 systems? Should I build an enterprise data model?” It turned out that a previous BPM project had tried to build an object model in the BPM platform, and the model was both complex and difficult to manage. Worse still, the model was already unstable and did not yet account for the data structures across the multiple systems in the enterprise.

Intuitively, I felt that trying to produce an enterprise data model was the wrong approach. Having a history of data modelling, I knew that this could take many months to get right in a complex organisation and would ultimately delay the transformation program. Even if the model were actually completed, the likelihood of it being accurate or relevant was pretty low.

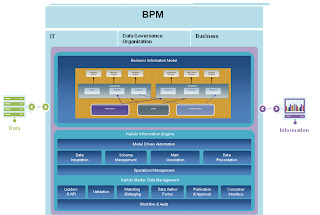

This led me to investigate where we had done this type of thing before, and I found that on our most successful account we had encountered the same problem and engineered a technically brilliant but highly complex solution that was again difficult to manage. I began discussing the problem with my colleagues, Simon Gratton and Chris Coyne, from the Business Information Management (BIM) team, who had also encountered the same problem. Better yet, they had begun to address it.

As we discussed the issue, they from a data perspective and I from a process perspective, a common approach emerged. The biggest problems of enterprise programs were that they failed to start at the enterprise level and that initial projects focussed on too small an area, for the understandable and perfectly valid reason of getting that “score on the door”. However, when going further, the business architecture or Target Operating Model (TOM) “provides a vision and framework for the change program; it acts as a rudder for the projects, helping the individuals involved make good decisions” (Derek Miers and Alexander Peters). Without this rudder, projects can all too quickly run aground.

This operating model also specifies the strategy and core processes that will support the new operation. These processes will imply a set of services (create customer, update address) and a set of data objects that are required. Once defined at this logical level, the technical services and reusable components can also be defined. The core data objects, once identified, should be defined in a common information model that will support the core processes.
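To make the relationship between a core data object and its services concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a description of any particular BPM or MDM product: the `Customer` class, the `CustomerService` class and the subscriber callbacks standing in for downstream systems are all hypothetical names introduced for this example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Customer:
    """Hypothetical data object with one agreed enterprise definition."""
    customer_id: str
    name: str
    address: str


class CustomerService:
    """Hypothetical reusable business services built on the shared object."""

    def __init__(self) -> None:
        self._customers: Dict[str, Customer] = {}
        # Callbacks stand in for adapters to downstream systems (assumption).
        self._subscribers: List[Callable[[Customer], None]] = []

    def subscribe(self, callback: Callable[[Customer], None]) -> None:
        self._subscribers.append(callback)

    def create_customer(self, customer_id: str, name: str, address: str) -> Customer:
        if customer_id in self._customers:
            raise ValueError(f"customer {customer_id} already exists")
        customer = Customer(customer_id, name, address)
        self._customers[customer_id] = customer
        self._publish(customer)
        return customer

    def update_address(self, customer_id: str, new_address: str) -> Customer:
        customer = self._customers[customer_id]
        customer.address = new_address
        self._publish(customer)
        return customer

    def _publish(self, customer: Customer) -> None:
        # Every subscribed system receives the same change, once.
        for callback in self._subscribers:
            callback(customer)


# Usage: two dictionaries play the role of separate systems holding addresses.
crm_addresses: Dict[str, str] = {}
billing_addresses: Dict[str, str] = {}

service = CustomerService()
service.subscribe(lambda c: crm_addresses.update({c.customer_id: c.address}))
service.subscribe(lambda c: billing_addresses.update({c.customer_id: c.address}))

service.create_customer("C-001", "A. Example", "1 High Street")
service.update_address("C-001", "2 Low Road")
```

The point of the sketch is that each process calls the same `update_address` service rather than writing to each system itself, so the copies of the address cannot drift apart.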

Over a number of engagements, the process has been refined into the following steps.

1 Create TOM with a clear vision and strategy

2 Define the business operations and core business processes to deliver the strategy

3 Select 4 or 5 processes and identify the data entities required to support them

4 Create a common information model (CIM) with agreed enterprise definitions of the data entities

5 Identify reusable services that create, update or amend the entities (these become reusable business services)

6 Build a single process as project 1 and populate the CIM with general attributes*

7 Incrementally develop the CIM over the life of the program building in additional entities required to support new processes

*Please note: some data attributes will be very specific to a process (e.g. insurance rating engine) which can be created only in the BPM solution. However, other attributes and entities will be applicable to the enterprise and so should be stored in the CIM.
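The split described in the note above can be sketched as follows. This is only an illustration: the attribute names, the `CIM_ATTRIBUTES` set and the `split_attributes` helper are hypothetical, and a real program would agree the enterprise attribute list as part of step 4.

```python
from typing import Any, Dict, Set, Tuple

# Hypothetical attribute names agreed at enterprise level (held in the CIM).
CIM_ATTRIBUTES: Set[str] = {"customer_id", "name", "address"}


def split_attributes(record: Dict[str, Any]) -> Tuple[Dict[str, Any], Dict[str, Any]]:
    """Separate enterprise attributes from process-specific ones."""
    cim_part = {k: v for k, v in record.items() if k in CIM_ATTRIBUTES}
    process_part = {k: v for k, v in record.items() if k not in CIM_ATTRIBUTES}
    return cim_part, process_part


# Usage: an insurance quote carries rating data that only this process needs.
quote_request = {
    "customer_id": "C-001",
    "name": "A. Example",
    "address": "1 High Street",
    "rating_band": "B",
    "no_claims_years": 4,
}
cim_part, process_part = split_attributes(quote_request)
```

Here `cim_part` holds what would be stored in the CIM, while `process_part` holds the rating attributes that stay in the BPM solution.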

Acknowledgements

I would like to express heartfelt thanks to my colleagues and peers who, through lively discussion, debate and violent agreement, helped refine the ideas expressed in this paper.

Chris Coyne

Simon Gratton

Fernand Khousakoun

And external sources

Derek Miers and Alexander Peters

[i] BPM vendors like Cordys and Software AG have MDM solutions fully integrated into their suites.