Alan Earls recently published a good list of what comprises good BPM governance. To this list I would also add:

I would also ensure that there is a clear enterprise architecture (EA) framework that supports your BPM initiative. Something like IAF or TOGAF can seem heavyweight, but it can save you a lot of time, and you can (and should) implement the EA incrementally, project by project, as you do with your BPM initiative.

Comments, observations, thoughts and predictions on Business Process Management.

Thursday, 1 December 2011

What makes good BPM Governance ...

Finally, someone who is taking a holistic view of collaborative BPM. See Paul Liesenberg's article on Architecting Collaborative Applications. Too many articles have focussed on collaborative or social BPM as being just about creating the business process with some web 2.0 gimmickry. In fact, as Paul quite rightly says, entire processes are about collaboration across people, departments, systems and even organisations. BPM supports, and is an integral part of, a collaborative architecture. I am currently editing a piece on Collaborative BPM by one of my colleagues, Marc Kerremans, who talks about this trend.

Sunday, 13 November 2011

BPM and Adaptive Case Management: they’re one and the same, I’m afraid…

I feel like I need to rant, and I have been feeling this way for quite a while; in fact, ever since Dynamic Case Management (DCM), Adaptive Case Management (ACM), or whatever three-letter acronym you like to call it, came on the scene.

The reason for my acute irritation is the pious articles proclaiming this new paradigm for resolving a hitherto unknown work pattern. My contention is that DCM or ACM is not new; it falls completely within the definition of Case Management. I’ll concede that it has some specific characteristics that distinguish it from traditional Case Management, but in my view these are semantics, and they reflect the improved capabilities of BPM solutions to manage an ever broader range of work types that have always existed.

I wasn’t going to write this blog because I knew I’d become emotional; however, after reading Bruce Silver’s piece on BPM and ACM earlier today, I just started blogging. Bruce starts off by setting the stage: “can a BPMS do a good job with case management, or do you need a special tool?” He then goes into a discussion of BPMN. How the latter relates to the end user I’ll never know; in the last five years of implementing BPM solutions, none of my customers has ever begged me to use BPMN. Many have asked if it is necessary or a good standard, to which I have replied that standards are always good, but as long as the project team agrees on a simple modelling notation, it really doesn’t matter too much.

My real bone of contention, however, is that case management has always been the sweet spot for both workflow and BPM solutions. FileNet, Documentum and Staffware made millions from implementing solutions for insurance companies that allowed them to program quite rigid processes into workflow solutions, providing better control and visibility. BPM tools, with their improved integration and model-driven architectures, allowed simpler, richer and faster process design and provided real-time reporting. These solutions raised the game for process automation, causing the insurance companies that had previously invested in workflow solutions to quickly adopt the new BPM paradigm.

There was never any question, in the minds of customers, that what the new BPM solution provided was improved Case Management. It was only when the pundits, and no doubt the BPM vendors, came along with new capabilities to sell that we began to muddy the waters with definitions of Case Management, or more specifically ACM and DCM. These definitions meant that you could now handle so-called unstructured processes, allowing knowledge workers to change their processes on the fly, to route work to a new role or to create a new activity not previously defined in the process.

For me, processes have always lived on a spectrum from unstructured email conversations to highly structured insurance claims handling. Good examples of unstructured processes are incidents, such as an oil spill, or a call centre agent receiving calls about a faulty car whose brakes fail inexplicably. There is no prescribed process, and the knowledge workers in these situations must figure out how to resolve the issue, usually through discussion amongst a team of individuals who derive a policy with guidelines and a set of rules. The resulting process emerges through interaction and collaboration. It may never be documented, for example where the likelihood of the incident occurring again is so remote that it doesn’t warrant being codified in a programmatic solution.

Another example of an unstructured process is where the knowledge worker seeks to resolve an out-of-boundary condition: although it is an insurance claim, the existing rules don’t specify what to do for this condition, or the knowledge worker may want to treat this high-spending customer differently to retain their business, but the rules don’t allow it. In order to resolve the case, the knowledge worker must use their discretion, often by consulting with managers or, if authorised, using their own initiative, to determine what the appropriate course of action should be. In effect, they create an exception process on the fly. In this latter example the process may be codified and written into policy so that all customers meeting these criteria can be treated in a similar manner.

Real adaptive case management allows this exception handling to be codified on the fly. Rather than resorting to email outside the process to agree the change, the knowledge worker can create the steps that route the work to the relevant individual, or alternatively create a new rule or even a data item to record the new decision type, rather than just recording the action in the “notes”.
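To make this concrete, here is a minimal Python sketch, not any vendor's API: the `Case`, `Step` and `add_ad_hoc_step` names, the `case_manager` authorisation check and the claim data are all hypothetical. It shows an authorised knowledge worker inserting an ad hoc step into an in-flight case and recording the new decision as structured data rather than as a note.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class Step:
    """One activity in a case, routed to a role (hypothetical model)."""
    name: str
    role: str
    ad_hoc: bool = False  # True if added at run time rather than designed up front


@dataclass
class Case:
    case_id: str
    steps: List[Step] = field(default_factory=list)
    decisions: Dict[str, str] = field(default_factory=dict)

    def add_ad_hoc_step(self, user_roles: Set[str], name: str, route_to: str,
                        before: Optional[str] = None) -> Step:
        # Only authorised users may change the process of an in-flight case.
        if "case_manager" not in user_roles:
            raise PermissionError("user is not authorised to change this case")
        step = Step(name, route_to, ad_hoc=True)
        names = [s.name for s in self.steps]
        # Insert ahead of the named step, or append if no anchor is given or found.
        index = names.index(before) if before in names else len(self.steps)
        self.steps.insert(index, step)
        return step

    def record_decision(self, decision_type: str, outcome: str) -> None:
        # Store the new decision type as data, not as free text in the "notes".
        self.decisions[decision_type] = outcome


claim = Case("CLM-001", [Step("Assess claim", "claims_handler"), Step("Pay claim", "finance")])
claim.add_ad_hoc_step({"case_manager"}, "Approve goodwill payment",
                      route_to="underwriting_manager", before="Pay claim")
claim.record_decision("goodwill_payment", "approved")
print([s.name for s in claim.steps])
# ['Assess claim', 'Approve goodwill payment', 'Pay claim']
```

Because ad hoc steps are flagged, a later review could decide whether to promote them into the designed process, in line with the point above that exceptions can eventually be written into policy.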

The fact that current BPM solutions allow you to manage these latter scenarios is proof positive that this is just an extension of case management; the new steps can be included as an “alternate flow”. What I haven’t heard from any quarter is that you cannot use a BPMS to handle these ad hoc situations. In fact, most vendors are falling over themselves to explain how elegantly they can handle these new use cases in the latest versions of their solutions.

And this is why I get so angry: we are talking about processes, cases that either need to be captured as a one-off or need to give the user the ability to refine the process on the fly. In both scenarios, however, we are still talking about a case. When the knowledge worker must create new activities, policies or rules within a process, this is just an extension of platform capability, completely within the bounds of the latest BPM solutions.

So, just for the record: processes of whatever type (ad hoc, structured or unstructured) have always been with us, and BPM and workflow solutions have always been able to manage them. Traditionally this was only in limited ways, by codifying them once the rules and activities became stable, agreed policies and procedures.

In today’s world BPM solutions can handle more dynamic processes by allowing knowledge workers, where authorised, to create new activities (policy and procedure) on the fly at run time. They are doing this with the same BPM solutions, albeit enhanced, that provide essentially the same core functionality: model-driven development of processes, rules, SLAs and UIs, all without recourse to writing code. Yes, the functionality has been extended somewhat, and the complexity hidden away so that users can create more robust and compliant solutions more easily. But they are still cases, and they are still managed. Right, I feel better now that I’ve got that off my chest. Back to Strictly Come Dancing!

Sunday, 30 October 2011

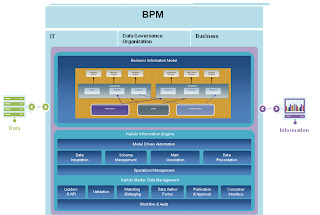

Mastering Data: Where do processes fit in?

“A failure to address service-oriented data redesign at the same time as process redesign is a recipe for disaster.” Michael Blechar

On recent trips to client sites over the summer, the same problem has arisen regarding enterprise Business Process Management (BPM) programs. I have repeatedly been asked: “How do I achieve one clear version of the truth across multiple applications? How can I avoid complex data constructs in the BPM solution, and do I need to build an enterprise data model to support the enterprise BPM program?” It took me a while to piece the answers together, but a chance conversation with some colleagues about Master Data Management yielded some pretty interesting results. But before I launch into possible solutions, let's take a step back and ask, “How did we get here?”

With their adoption of open standards, SOA, SOAP and web services, BPM solutions are excellent vehicles for creating composite applications. Organisations have naturally grasped the opportunity to hide the complexity of application silos and eliminate error-prone, time-consuming re-keying of data. However, they have embarked on these enterprise programs in a piecemeal fashion, one project at a time, often with no clear vision of their target operating model, and ignoring what Michael Blechar calls siloed data.

Creating a single unified interface that contains all the data needed to complete a process can greatly contribute to a seamless and streamlined customer experience, meeting both employee and customer expectations. I have seen many examples of these apps in recent years.

However, customers who had embarked on enterprise BPM programs were finding that, far from having simplified and streamlined processes, they were increasingly coming face to face with the problem of data. Data from multiple sources – different systems, differing formats, sheer volume – was not so much the problem as duplication. While they are great at integrating and presenting data, BPM solutions, with few exceptions, are much less suited to managing data. How so?

In today's heterogeneous application landscapes, organisations have multiple systems that hold customer, product, partner, payment history and a host of other critical data. First-wave BPM implementations typically integrate with one or two systems, so the problem of master data management stays hidden. However, as the second and third waves of process implementations begin and the need to integrate with more systems carrying the same entities grows, the problem of synchronising updates to a customer address or product record becomes acute.
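The synchronisation problem can be illustrated with a deliberately small Python sketch of a hub-and-spoke approach: one authoritative customer record, with each registered system receiving the change through its own adapter. The `CustomerHub` class, the system names and the field layouts are hypothetical, and the sketch ignores failures, retries and transactions, which a real MDM or integration layer would have to handle.

```python
from typing import Callable, Dict, List

Adapter = Callable[[str, dict], None]


class CustomerHub:
    """Hypothetical hub: holds the master record and pushes changes to each system."""

    def __init__(self) -> None:
        self.golden: Dict[str, dict] = {}
        self.adapters: Dict[str, Adapter] = {}

    def register(self, system: str, adapter: Adapter) -> None:
        self.adapters[system] = adapter

    def update_address(self, customer_id: str, address: dict) -> List[str]:
        # Update the master copy once...
        self.golden.setdefault(customer_id, {})["address"] = dict(address)
        # ...then propagate the same change to every registered system.
        for adapter in self.adapters.values():
            adapter(customer_id, address)
        return list(self.adapters)


# Two stand-in "systems", each with its own field names and formats.
crm_db: Dict[str, dict] = {}
billing_db: Dict[str, dict] = {}


def crm_adapter(customer_id: str, address: dict) -> None:
    crm_db[customer_id] = {"street": address["line1"], "zip": address["postcode"]}


def billing_adapter(customer_id: str, address: dict) -> None:
    billing_db[customer_id] = {"ADDR1": address["line1"].upper(), "PCODE": address["postcode"]}


hub = CustomerHub()
hub.register("crm", crm_adapter)
hub.register("billing", billing_adapter)
print(hub.update_address("C42", {"line1": "1 High St", "postcode": "AB1 2CD"}))
# ['crm', 'billing']
```

Each system added in a later wave costs one more adapter rather than another point-to-point copy of the address logic, which is the kind of property a dedicated MDM layer is meant to provide.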

While BPM tools have the infrastructure to hold a data model and integrate with multiple core systems, the process of mastering the data can become complex and, as the program expands across ever more systems, the challenges can become unmanageable. In my view, BPMS solutions, with a few exceptions, are not the right place to be managing core data[i]. At the enterprise level, MDM solutions designed specifically for this purpose are far more elegant.

So back to those customers I visited in midsummer. I was asked, “How do I manage data across upwards of 30 systems? Should I build an enterprise data model?” It turned out that a previous BPM project had tried to build an object model in the BPM platform; the model was both complex and difficult to manage. Worse still, the model was already unstable and did not yet account for the data structures across the multiple systems in the enterprise.

Intuitively I felt that trying to produce an enterprise data model was the wrong approach. Having a history of data modelling, I knew that this could take many months to get right in a complex organisation and would ultimately delay the transformation program. Even if the model were actually completed, the likelihood of it being accurate or relevant was almost certainly pretty low.

This led me to investigate where we had done this type of thing before, and I found that on our most successful account we had encountered the same problem and engineered a technically brilliant but highly complex solution that was again difficult to manage. I began discussing the problem with my colleagues Simon Gratton and Chris Coyne from the Business Information Management (BIM) team, who had also encountered the same problem; better yet, they had begun to address it.

As we discussed the issue – they from a data perspective, me from a process perspective – a common approach emerged. The biggest problems of enterprise programs were that they failed to start at the enterprise level, and that initial projects focussed on too small an area, for the understandable and perfectly valid reason of getting that “score on the door”. However, as a program goes further, the business architecture or Target Operating Model (TOM) “provides a vision and framework for the change program; it acts as a rudder for the projects, helping the individuals involved make good decisions” (Derek Miers and Alexander Peters). Without this rudder, projects can all too quickly run aground.

This operating model also specifies the strategy and the core processes that will support the new operation. These processes imply a set of services (create customer, update address) and a set of required data objects. Once these are defined at this logical level, the technical services and reusable components can also be defined. The core data objects, once identified, should be defined in a common information model that will support the core processes.

Over a number of engagements, the process has been refined into the following steps:

1 Create TOM with a clear vision and strategy

2 Define the business operations and core business processes to deliver the strategy

3 Select 4 or 5 processes and identify the data entities required to support them

4 Create a common information model (CIM) with agreed enterprise definitions of the data entities

5 Identify reusable services that create, update or amend the entities (these become reusable business services)

6 Build a single process in project 1 and populate the CIM with general attributes*

7 Incrementally develop the CIM over the life of the program building in additional entities required to support new processes

*Please note: some data attributes will be very specific to a process (e.g. an insurance rating engine) and can be created in the BPM solution alone. However, other attributes and entities will be applicable to the enterprise and so should be stored in the CIM.
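Steps 4 to 7 and the note on process-specific attributes can be sketched in a few lines of Python. This is a hypothetical illustration rather than a prescribed tool: the `onboard_process` function, the entity and attribute names, and the `enterprise`/`process` scope labels are all assumptions. Each new process extends the CIM with its enterprise-wide attributes, while process-specific attributes (such as a rating factor) are returned to stay in the BPM solution.

```python
from typing import Dict, Set

# Common information model: entity name -> enterprise-wide attribute names.
Cim = Dict[str, Set[str]]


def onboard_process(cim: Cim, needs: Dict[str, Dict[str, str]]) -> Dict[str, Set[str]]:
    """Grow the CIM for one process; return the attributes kept only in the BPM solution.

    `needs` maps entity -> {attribute: scope}, where scope is "enterprise" or "process".
    """
    process_only: Dict[str, Set[str]] = {}
    for entity, attributes in needs.items():
        shared = {a for a, scope in attributes.items() if scope == "enterprise"}
        local = {a for a, scope in attributes.items() if scope == "process"}
        if shared:
            # Step 7: extend the CIM incrementally; existing definitions are reused.
            cim.setdefault(entity, set()).update(shared)
        if local:
            process_only[entity] = local
    return process_only


cim: Cim = {}
claims_only = onboard_process(cim, {
    "Customer": {"customer_id": "enterprise", "address": "enterprise"},
    "Policy": {"policy_no": "enterprise", "rating_factor": "process"},
})
renewals_only = onboard_process(cim, {
    "Customer": {"customer_id": "enterprise", "email": "enterprise"},
})
print(sorted(cim["Customer"]))  # ['address', 'customer_id', 'email']
print(claims_only)              # {'Policy': {'rating_factor'}}
```

The second process reuses the existing `Customer` definition and only adds `email`, which is the incremental growth step 7 describes, instead of modelling the whole enterprise up front.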

Acknowledgements

I would like to express heartfelt thanks to my colleagues and peers who, through lively discussion, debate and violent agreement, helped refine the ideas expressed in this paper.

Chris Coyne

Simon Gratton

Fernand Khousakoun

And external sources

Derek Miers and Alexander Peters

[i] Vendors such as Cordys and Software AG have MDM solutions fully integrated into their BPM suites.

Thursday, 15 September 2011

Making Legacy Agile: Controlled Migration

Controlled migration uses BPM solutions to "wrap" around ageing, inflexible IT assets. The processes within these assets are exposed in a more flexible, agile process layer, where they can be adapted to the changing needs of the business without having to "rip and replace" core systems.

The problem with CRM, ERP and older mainframe applications is that they were not designed to be agile. CRM solutions are great data repositories but they support processes poorly, with users needing to navigate to the right screen to find the piece of information they need. Worse still, making changes to the original "data views" was a difficult, time consuming and costly exercise.

ERP solutions fared little better, as they were designed with a "best practice" process already installed. If the client wanted to amend or adapt this process, it became a difficult, costly and lengthy exercise. Mainframes fare no better and were never designed to cope with today's fast-changing, process-centric environments. Many applications were product-centric and dictated a rigid, hierarchical, menu-driven approach to accessing data and functions.

Other proprietary workflow or off-the-shelf products were never designed for integration and therefore became isolated islands of functionality. The only way to access them was to rekey information from one platform to the other, with the inevitable mis-keying errors and processing delays that result.

Modern enterprises are multi-product, customer-centric organisations that require knowledge workers to execute multiple customer-related tasks at the same time. Against a backdrop of ageing IT infrastructure, the challenges are huge.

With a BPM solution such as Pegasystems PRPC, the process is primary and the application is designed to present the right data to the right person at the right time. The system guides the user through the process, rather than the user having to find the data or remember what to do next. Processes, products and even new applications can be added in weeks or months rather than years.

Finally, controlled migration is about protecting assets. BPM solutions can integrate more quickly with critical systems across the enterprise, expose hidden processes, and facilitate change and adoption. By exposing functionality in the process layer, organisations are able to extend the useful life of their IT assets, which remain as systems of record or transaction processing engines. Over time, more processes and business rules can be transferred to the more agile and flexible process layer, until the underlying system can be safely retired or replaced. This "controlled migration" can be implemented with minimal impact on business as usual.
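Controlled migration resembles what is often called the strangler fig pattern. Here is a hypothetical Python sketch of it: a process-layer facade owns every entry point; each operation is served by the legacy system until a replacement is registered, and the legacy system can be retired once nothing routes to it. The `ProcessLayer` class and the operation names are illustrative assumptions, not a Pega or any other vendor API.

```python
from typing import Any, Callable, Dict

Handler = Callable[..., Any]


class ProcessLayer:
    """Hypothetical facade: callers talk to the process layer, never to the legacy system."""

    def __init__(self, legacy: Dict[str, Handler]) -> None:
        self.legacy = legacy
        self.migrated: Dict[str, Handler] = {}

    def migrate(self, operation: str, handler: Handler) -> None:
        # Move one operation (a process or rule) into the agile layer.
        self.migrated[operation] = handler

    def call(self, operation: str, *args: Any) -> Any:
        # Prefer the migrated implementation; otherwise fall back to legacy.
        if operation in self.migrated:
            return self.migrated[operation](*args)
        return self.legacy[operation](*args)

    def legacy_can_retire(self) -> bool:
        # Safe to retire once every legacy operation has a replacement.
        return set(self.legacy) <= set(self.migrated)


layer = ProcessLayer({
    "rate_policy": lambda premium: f"mainframe rated {premium}",
    "issue_policy": lambda ref: f"mainframe issued {ref}",
})
print(layer.call("rate_policy", 500))  # mainframe rated 500
layer.migrate("rate_policy", lambda premium: f"rules engine rated {premium}")
print(layer.call("rate_policy", 500))  # rules engine rated 500
print(layer.legacy_can_retire())       # False: issue_policy still runs on the mainframe
```

Because callers only ever see the facade, each operation can be moved independently, which is what keeps the disruption to business as usual small.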

Monday, 5 September 2011

MDM and Business Process Management

The convergence of BPM and MDM is nothing new. Products like Cordys have featured MDM components for some time, while BPM vendors such as Software AG (since it acquired Data Foundations) and Tibco also include MDM functionality as part of their BPM stacks. "Business processes can only be as good as the data on which they are based," according to Forrester analysts Rob Karel and Clay Richardson. In September 2009 they noted that data and process are as inseparable as the brain and the heart. Karel and Richardson went on to emphasise that:

…process improvement initiatives face a vicious cycle of deterioration and decline if master data issues are not addressed from the outset. And MDM initiatives face an uphill battle and certain extinction if they're not connected to cross-cutting business processes that feed and consume master data from different upstream and downstream activities.

Richardson also noted in his review of Software AG's acquisition of Data Foundations: "The only way master data can reduce risks, improve operational efficiencies, reduce costs, increase revenue, or strategically differentiate an organization is by figuring out how to connect and synchronize that master data into the business processes…." Clay goes on to say: "With this acquisition, Software AG acknowledges that the customers of its integration and business-process-centric solutions have a strong dependency on high-quality data. This move reflects a trend that we have identified and coined as 'process data management,' which recognizes the clear need for business process management (BPM) and MDM strategies to be much more closely aligned for both to succeed…" This observation is quite true. More recently, two clients have posed the question of how to manage data across the enterprise when embarking upon a large-scale BPM program. They have realised, as Karel did, that "To Deliver Effective Process Data Management... data and process governance efforts [will need] to be more aligned to deliver real business value from either."

Consider the often-discussed promise of adaptive processes or, as Richardson remarked, the ability to make "...processes much more dynamic as they're executing… processes reacting to business events and able to adapt in flight." To make this happen, clean, accurate data is critical; otherwise your processes are going to be adapting to unreliable or, worse, inaccurate data. The challenge, according to Richardson, is getting BPM and data teams to work together: research suggests that only 11% of master data management and business process management teams are co-located under the same organization or at least coordinate their activities.

Organisations like PlayCore, however, have already begun to realise the benefits of aligning the two schools of thought. PlayCore is a leading playground equipment and backyard products company whose products are sold under the brand names GameTime, Play & Park Structures, Robertson Industries, Ultra Play, Everlast Climbing, and Swing-N-Slide.

Each of PlayCore's six business units has its own separate general ledger (GL) system, and PlayCore corporate has a seventh. Two of them use JD Edwards; three of them use Intuit QuickBooks, while the other two use Sage MAS 200 and MYOB.

PlayCore's challenges centered around:

- Consolidating overall performance results from the seven separate GL systems

- Manual, time-consuming effort to pull detailed information from the different GL systems into the BPM system

- Centralizing class, department, customer, product, account, and company master data.

Source Profisee.com

Sunday, 4 September 2011

BPM and mastering data across the enterprise

BPM solutions typically rely on core systems to supply, or even master, the data they use. In a BPM process, data is retrieved from, displayed and updated in those core systems as part of a business process.

This functionality can and in my opinion should be provided by a solution dedicated to Master data management.

BPM solutions provide the means to integrate processes across siloed business applications and departments. This also means mediating, or in some cases creating, a data model and attribute definitions between multiple systems and departments. Typically, data models in different systems do not share the same definitions or the same validation rules. As more and more systems come online during a large transformation program, managing the data model becomes more complex and an ever-increasing overhead for business users.

If not managed, maintained and updated, the data model will no longer support current requirements and will be unable to grow to incorporate future systems as new processes come online. This can result in the need to re-engineer the data model and the BPM applications that rely on it. Data migration of in-flight work items is always difficult and time-consuming, causing increased disruption to the business and inevitable dips in customer service.

“The challenge for a BPM program is how to manage a consistent view of data across the enterprise applications that is now displayed through a single BPM solution”

Data for a BPM solution is often mastered in one or more source systems: ERP, CRM, SCM or homegrown applications. In some cases the master may be the BPM solution itself, with a separate data model created for it. The BPM solution typically retrieves, displays and modifies data from source systems through the business process, and the data must be updated in those source systems while maintaining data integrity and data validation rules.

Data definition, integrity and validation rules are often different for each system, and as a BPM solution grows across the enterprise, encompassing ever more systems, manually attempting to manage a data model across multiple systems and components becomes increasingly challenging.

The Capgemini MDM solution comprises tools that automate this process and provide model-driven approaches to managing data, including: automated sub-processes to perform data integration, extraction and transformation; data quality routines to cleanse, standardize and parse data; finding duplicates and managing potential matching candidates; and validations and checks.
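As a rough illustration of the cleanse, standardise and match steps listed above, here is a small Python sketch using only the standard library. It is not how the Capgemini or any other MDM product works: the record layout, the postcode blocking rule and the 0.85 similarity threshold are assumptions, and `difflib.SequenceMatcher` stands in for the far more sophisticated matching engines such tools use.

```python
import re
from difflib import SequenceMatcher
from itertools import combinations
from typing import Dict, List, Tuple

Record = Dict[str, str]


def standardise(record: Record) -> Record:
    # Cleanse and standardise: collapse whitespace, normalise case and postcode format.
    name = re.sub(r"\s+", " ", record["name"]).strip().lower()
    postcode = record["postcode"].replace(" ", "").upper()
    return {**record, "name": name, "postcode": postcode}


def match_candidates(records: List[Record], threshold: float = 0.85) -> List[Tuple[str, str, float]]:
    """Return pairs of record ids that are likely duplicates, for a data steward to review."""
    clean = [standardise(r) for r in records]
    candidates = []
    for a, b in combinations(clean, 2):
        if a["postcode"] != b["postcode"]:
            continue  # blocking: only compare records that share a postcode
        score = SequenceMatcher(None, a["name"], b["name"]).ratio()
        if score >= threshold:
            candidates.append((a["id"], b["id"], round(score, 2)))
    return candidates


records = [
    {"id": "crm-1", "name": "John  Smith", "postcode": "ab1 2cd"},
    {"id": "erp-7", "name": "Jon Smith", "postcode": "AB12CD"},
    {"id": "erp-9", "name": "Jane Doe", "postcode": "AB1 2CD"},
]
print(match_candidates(records))  # flags crm-1 / erp-7 as a likely duplicate
```

Returning candidate pairs with a score, rather than merging automatically, reflects the "manage potential matching candidates" step: borderline matches go to a person, not straight into the master record.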

“MDM provides a model driven architecture for creating and maintaining a model across multiple systems”

The semantic model is maintained in a separate environment dedicated to the management of data. Although the business can author and update the model, the complex data integrity and validation alignment across systems is managed automatically by the solution, ensuring a much greater level of accuracy in data validation with significantly less effort, and so a faster time to market.

Source Cordys Master Data Management Whitepaper

The BPM solution must rely on trusted data sources used by business stakeholders to support those processes. Master Data Management (MDM) is the process of aggregating data from multiple sources, then transforming and merging it based on business rules. According to Cordys (a BPM and MDM supplier), an MDM solution must have the ability to:

• Find trusted, authoritative information sources (master data stores)

• Know the underlying location, structure, context, quality and use of data assets

• Determine how to reconcile differences in meaning (semantic transformation)

• Understand how to ensure the appropriate levels of quality of data elements

Source Kalido Master Data Management Technical Overview

The model-driven approach enables the business user to define a semantic data model for the enterprise, e.g. Customer, Product, Order, Payment, Service Request, etc. Data management tools can interrogate and align the semantic model across multiple sources, maintaining relationships and data integrity.
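To show what aligning a semantic model across sources can mean in practice, here is a hypothetical Python sketch: per-system field mappings translate rows from two source systems into a semantic `Customer`, agreeing values are merged, and disagreements are reported for a data steward rather than silently overwritten. The system names, field names and mapping table are invented for illustration and do not come from any MDM product.

```python
from typing import Dict, Tuple

# Hypothetical mapping from each source system's fields to the semantic Customer model.
MAPPINGS: Dict[str, Dict[str, str]] = {
    "crm": {"cust_no": "customer_id", "full_name": "name", "tel": "phone"},
    "billing": {"ACCOUNT_REF": "customer_id", "CUST_NAME": "name", "PHONE_NO": "phone"},
}


def to_semantic(source: str, row: Dict[str, str]) -> Dict[str, str]:
    # Rename source-specific fields to semantic attribute names.
    return {semantic: row[local] for local, semantic in MAPPINGS[source].items() if local in row}


def align(rows_by_source: Dict[str, Dict[str, str]]) -> Tuple[Dict[str, str], Dict[str, Dict[str, str]]]:
    """Merge one customer's rows; return (agreed attributes, conflicting attributes by source)."""
    values: Dict[str, Dict[str, str]] = {}
    for source, row in rows_by_source.items():
        for attribute, value in to_semantic(source, row).items():
            values.setdefault(attribute, {})[source] = value
    merged = {a: next(iter(v.values())) for a, v in values.items() if len(set(v.values())) == 1}
    conflicts = {a: v for a, v in values.items() if len(set(v.values())) > 1}
    return merged, conflicts


merged, conflicts = align({
    "crm": {"cust_no": "C42", "full_name": "Jane Doe", "tel": "0123 456"},
    "billing": {"ACCOUNT_REF": "C42", "CUST_NAME": "Jane Doe", "PHONE_NO": "0999 111"},
})
print(merged)     # {'customer_id': 'C42', 'name': 'Jane Doe'}
print(conflicts)  # {'phone': {'crm': '0123 456', 'billing': '0999 111'}}
```

Keeping the mappings in one table, separate from the BPM processes, mirrors the idea of maintaining the semantic model in its own data management environment.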
